YOLOv7 was released in July 2022 by WongKinYiu and AlexeyAB. It achieves state-of-the-art performance for real-time object detection, and its models are trained to detect the 80 generic classes in the MS COCO dataset.

There are six versions of the model ranging from the namesake YOLOv7 (fastest, smallest, and least accurate) to the beefy YOLOv7-E6E (slowest, largest, and most accurate).

The model sizes differ as follows:

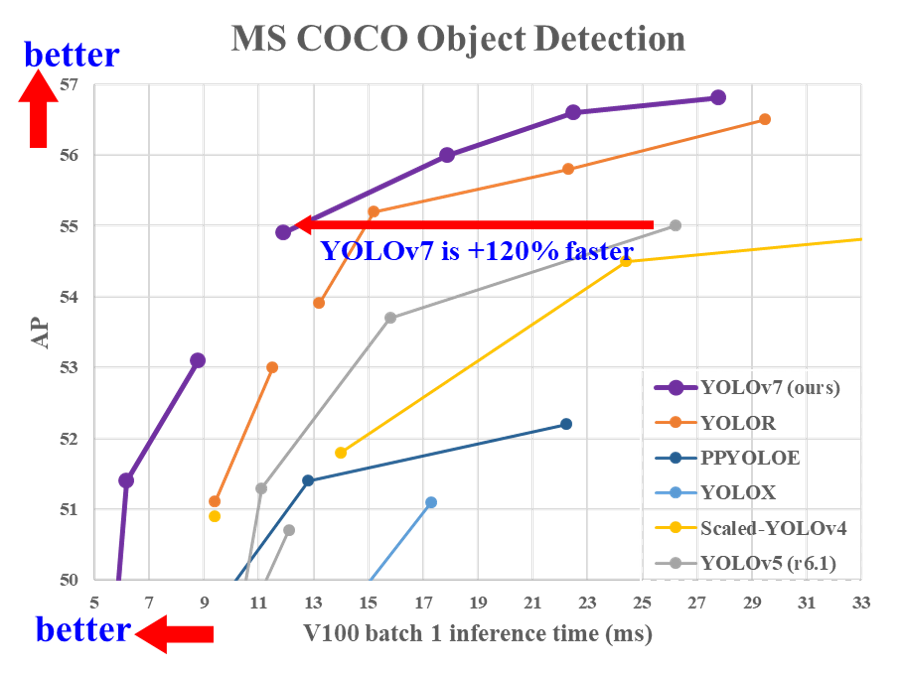

The evaluation of YOLOv7 models shows that they infer faster (x-axis) and with greater accuracy (y-axis) than comparable real-time object detection models. YOLOv7 sits in the upper left of the chart: faster and more accurate than its peer networks.

| Model | Test size (px) | AP (test) | AP50 (test) | AP75 (test) | Batch 1 FPS | Batch 32 average time |
|---|---|---|---|---|---|---|
| YOLOv7 | 640 | 51.4% | 69.7% | 55.9% | 161 fps | 2.8 ms |
| YOLOv7-X | 640 | 53.1% | 71.2% | 57.8% | 114 fps | 4.3 ms |
| YOLOv7-W6 | 1280 | 54.9% | 72.6% | 60.1% | 84 fps | 7.6 ms |
| YOLOv7-E6 | 1280 | 56.0% | 73.5% | 61.2% | 56 fps | 12.3 ms |
| YOLOv7-D6 | 1280 | 56.6% | 74.0% | 61.8% | 44 fps | 15.0 ms |
| YOLOv7-E6E | 1280 | 56.8% | 74.4% | 62.1% | 36 fps | 18.7 ms |
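The table lends itself to a simple selection rule: pick the most accurate variant that still meets a frame-rate target. The sketch below is a minimal illustration using the batch 1 FPS and AP (test) values copied from the table; `pick_variant` and `latency_ms` are hypothetical helpers, and the FPS figures are as reported, so throughput on your own hardware will differ.

```python
# (name, test size in px, AP test %, batch-1 fps), copied from the table above.
# These are reported figures; real throughput depends on your hardware.
VARIANTS = [
    ("YOLOv7", 640, 51.4, 161),
    ("YOLOv7-X", 640, 53.1, 114),
    ("YOLOv7-W6", 1280, 54.9, 84),
    ("YOLOv7-E6", 1280, 56.0, 56),
    ("YOLOv7-D6", 1280, 56.6, 44),
    ("YOLOv7-E6E", 1280, 56.8, 36),
]


def pick_variant(min_fps):
    """Return the most accurate variant whose batch-1 FPS is at least min_fps, or None."""
    candidates = [v for v in VARIANTS if v[3] >= min_fps]
    if not candidates:
        return None
    # Highest AP (test) among the variants that are fast enough.
    return max(candidates, key=lambda v: v[2])


def latency_ms(fps):
    """Convert frames per second into per-image latency in milliseconds."""
    return 1000.0 / fps


for target in (30, 60, 100, 200):
    choice = pick_variant(target)
    if choice is None:
        print(f">= {target} fps: no variant is fast enough")
    else:
        name, size, ap, fps = choice
        print(f">= {target} fps: {name} at {size}px, {ap}% AP, ~{latency_ms(fps):.1f} ms/image")
```

With these numbers, a 60 FPS target selects YOLOv7-W6 (84 FPS, 54.9% AP). The latency computed here is derived from the batch 1 column, so it is not the same quantity as the batch 32 average time column.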

You can run fine-tuned YOLOv7 object detection models with Inference.

First, install Inference:

```bash
pip install inference
```

Retrieve your Roboflow API key and save it in an environment variable called ROBOFLOW_API_KEY:

```bash
export ROBOFLOW_API_KEY="your-api-key"
```
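`export` only sets the variable for the current shell session and the programs started from it, so a key exported in one terminal is not visible in another. A quick standard-library check, sketched here with a hypothetical `get_api_key` helper (not part of Inference), fails with a clear message instead of failing later inside the model-loading call.

```python
import os


def get_api_key(var_name="ROBOFLOW_API_KEY"):
    """Read the API key from the environment, raising a clear error if it is missing.

    Hypothetical helper for illustration; Inference does not require it.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f'{var_name} is not set; run: export {var_name}="your-api-key"')
    return key
```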

To use your model, run the following code:

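```python
import inference

# Load a fine-tuned model by its ID and version (reads ROBOFLOW_API_KEY from the environment).
model = inference.load_roboflow_model("model-name/version")

# Run object detection on a single image file.
results = model.infer(image="YOUR_IMAGE.jpg")
```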

Above, replace:

- `YOUR_IMAGE.jpg` with the path to your image.
- `model-name/version` with the YOLOv7 model ID and version you want to use. Learn how to retrieve your model and version ID.
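To run the same model over a whole folder of images, a loop around `model.infer` is enough. This is a minimal sketch, assuming `model` is the object returned by `inference.load_roboflow_model(...)` and that `infer(image=...)` accepts a file path, as in the snippet above. `parse_model_id`, `find_images`, and `infer_many` are hypothetical helpers, not part of Inference, and requiring the version to be a positive integer is an assumption about the `model-name/version` format.

```python
from pathlib import Path


def parse_model_id(model_id):
    """Split a "model-name/version" string into (name, version).

    Assumes the version is a positive integer (an assumption, not a documented rule).
    """
    name, sep, version = model_id.partition("/")
    if not sep or not name or not version.isdigit() or int(version) < 1:
        raise ValueError(f"expected 'model-name/version', got {model_id!r}")
    return name, int(version)


def find_images(folder, extensions=(".jpg", ".jpeg", ".png")):
    """Collect image files directly inside `folder`, sorted by path."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )


def infer_many(model, image_paths):
    """Call model.infer(image=...) once per path and return {path: result}."""
    return {str(p): model.infer(image=str(p)) for p in image_paths}
```

With the model loaded as above, usage would look like `infer_many(model, find_images("images"))`, where `images` is a placeholder folder name. Calling `parse_model_id` on your ID before loading catches a malformed string early.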